The Future of 3D-Printed Prosthetic Hands

Neural interfaces could mark a major step forward in prosthetic hand functionality. Because they connect directly to the user's nervous system, they can allow much more nuanced control over the prosthetic. In principle, a user could not only manipulate objects with a prosthetic hand but also feel their texture and temperature, making interaction with the environment more natural and intuitive. The technology is still at an early stage, but it holds considerable potential for improving quality of life for amputees and people with neurological conditions.

The central challenge is accurately translating neural signals into precise movements. Decoding these signals depends on sophisticated algorithms and machine learning models, which let the prosthetic hand respond quickly and smoothly to the user's intentions. Making this decoding reliable and minimizing errors in signal interpretation still requires extensive research and development.
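As a rough illustration of the decoding step, the sketch below assumes multichannel recordings split into short windows. It summarizes each channel by its root-mean-square (RMS) amplitude and assigns the window to the grip whose calibration average (centroid) is nearest. The window layout, the grip labels, the calibration data, and the choice of a nearest-centroid classifier are all illustrative assumptions; practical decoders typically use richer features and models.

```python
import math
from typing import Dict, List, Sequence

# Assumed layout: a "window" is a short segment of a recording, stored as one
# list of raw samples per electrode channel.
Window = Sequence[Sequence[float]]


def rms_features(window: Window) -> List[float]:
    """Root-mean-square amplitude of each channel in the window."""
    return [math.sqrt(sum(s * s for s in ch) / len(ch)) for ch in window]


class NearestCentroidDecoder:
    """Hypothetical decoder: picks the grip whose calibration centroid is closest."""

    def __init__(self) -> None:
        self.centroids: Dict[str, List[float]] = {}

    def fit(self, examples: Dict[str, List[Window]]) -> None:
        # Average the RMS features of every calibration window recorded for each grip.
        for grip, windows in examples.items():
            feats = [rms_features(w) for w in windows]
            self.centroids[grip] = [sum(col) / len(feats) for col in zip(*feats)]

    def predict(self, window: Window) -> str:
        x = rms_features(window)

        def sq_dist(c: List[float]) -> float:
            # Squared Euclidean distance between the window's features and a centroid.
            return sum((a - b) ** 2 for a, b in zip(x, c))

        return min(self.centroids, key=lambda grip: sq_dist(self.centroids[grip]))


# Usage with made-up two-channel calibration data (not real recordings).
decoder = NearestCentroidDecoder()
decoder.fit({
    "open": [[[0.9, -1.1, 1.0], [0.1, -0.1, 0.1]]],
    "close": [[[0.1, -0.1, 0.1], [1.0, -0.9, 1.1]]],
})
print(decoder.predict([[0.8, -0.9, 1.0], [0.2, -0.1, 0.1]]))  # open
```

Nearest-centroid classification is chosen here only because it is short and easy to follow; it shows the basic shape of the problem (signal window in, intended movement out) rather than a state-of-the-art method.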

AI-Powered Control Systems

Artificial intelligence (AI) can make prosthetic hands more responsive and adaptable. AI algorithms can analyze large volumes of data from the neural interface and learn the user's specific signal patterns, needs, and preferences over time. This personalization can make control feel more natural and intuitive, significantly improving the user experience.
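One simple way to picture this kind of adaptation is a model whose per-grip templates drift toward the user's recent signals. The sketch below is hypothetical: it assumes each grip is represented by a feature centroid and that the system somehow learns which grip the user actually intended (for example, from a confirmation). It then nudges that centroid toward the new sample with an exponential moving average controlled by an assumed `rate` parameter.

```python
from typing import Dict, List


class AdaptiveCentroids:
    """Per-user grip templates that drift toward the user's recent signals.

    Hypothetical sketch: each grip keeps a feature centroid, and every
    movement the user confirms nudges that centroid with an exponential
    moving average, so the model tracks gradual changes in the user's signals.
    """

    def __init__(self, centroids: Dict[str, List[float]], rate: float = 0.1) -> None:
        if not 0.0 < rate <= 1.0:
            raise ValueError("rate must be in (0, 1]")
        self.centroids = {grip: list(c) for grip, c in centroids.items()}
        self.rate = rate

    def classify(self, features: List[float]) -> str:
        # Nearest centroid by squared Euclidean distance.
        return min(
            self.centroids,
            key=lambda g: sum((f - c) ** 2 for f, c in zip(features, self.centroids[g])),
        )

    def update(self, grip: str, features: List[float]) -> None:
        # Move the confirmed grip's centroid a fraction `rate` toward the new sample.
        old = self.centroids[grip]
        self.centroids[grip] = [
            (1.0 - self.rate) * c + self.rate * f for c, f in zip(old, features)
        ]


model = AdaptiveCentroids({"open": [1.0, 0.0], "close": [0.0, 1.0]}, rate=0.5)
model.update("open", [0.6, 0.2])
print(model.centroids["open"])  # [0.8, 0.1]
```

A small `rate` adapts slowly but resists one-off noisy readings; a large one follows the user quickly but can drift after a few misinterpreted movements.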

Consider a prosthetic hand that anticipates the user's intended movement and adjusts its position and grip accordingly. AI-powered control systems could provide this kind of advanced functionality, bringing closer a future in which prosthetic hands integrate seamlessly into daily life and let users perform complex tasks with ease and precision.

Personalized Design and Customization

The future of 3D-printed prosthetic hands extends beyond the mechanical structure itself. Combined with neural interfaces and AI, 3D printing opens the door to personalized design and customization. By incorporating individual user data, a prosthetic hand can be tailored closely to the user's anatomy and needs. A well-tailored hand fits more comfortably and functions better, reducing the need for repeated adjustments or modifications.
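As a minimal sketch of how user data might feed into a printed design, the example below computes per-axis scale factors for a base hand model from two measurements, such as those taken from the user's intact hand. The template dimensions, the choice of palm width and hand length as the inputs, and the `HandDims`/`scale_factors` names are all hypothetical; real fitting would involve far more detailed anatomical data.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class HandDims:
    """Two basic hand measurements, in millimetres."""
    palm_width_mm: float
    hand_length_mm: float


# Hypothetical dimensions of the base CAD template the printed parts are made from.
TEMPLATE = HandDims(palm_width_mm=80.0, hand_length_mm=180.0)


def scale_factors(measured: HandDims, template: HandDims = TEMPLATE) -> Tuple[float, float]:
    """Return (width, length) scale factors to apply to the template model."""
    if measured.palm_width_mm <= 0 or measured.hand_length_mm <= 0:
        raise ValueError("measurements must be positive")
    return (
        measured.palm_width_mm / template.palm_width_mm,
        measured.hand_length_mm / template.hand_length_mm,
    )


# Example with made-up measurements.
print(scale_factors(HandDims(palm_width_mm=84.0, hand_length_mm=171.0)))  # (1.05, 0.95)
```

Scaling a whole model also resizes features such as pin holes or screw bosses that may need to match off-the-shelf hardware, so a practical workflow would likely have to handle those features separately.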

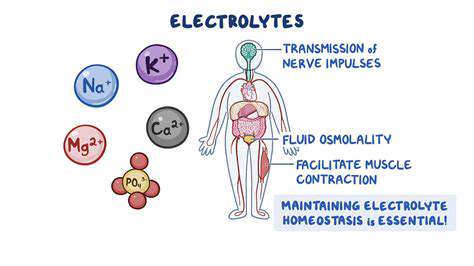

Improved Sensory Feedback

Most current prosthetic hands provide little or no sensory feedback, which people rely on to interact naturally with their environment. By incorporating neural interfaces and AI, future prosthetic hands could relay detailed sensory information to the user, such as the texture, temperature, and even weight of objects, substantially enhancing the user's sense of touch and control. This feedback matters most for tasks that require fine motor skills, such as buttoning a shirt or playing a musical instrument.
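To make the idea of relaying sensory information more concrete, the sketch below maps a fingertip force reading to a stimulation pulse rate: no stimulation below a small threshold, a linear rise across the working range, and saturation at a maximum. The function name and every default value (threshold, force range, frequency range) are hypothetical placeholders rather than clinical settings, and real systems may encode force differently, for example through stimulation amplitude or patterns.

```python
def force_to_stim_hz(
    force_n: float,
    threshold_n: float = 0.2,
    max_force_n: float = 10.0,
    min_hz: float = 10.0,
    max_hz: float = 100.0,
) -> float:
    """Map a fingertip force reading (newtons) to a stimulation pulse rate (Hz).

    Defaults are hypothetical placeholders, not clinical values. Forces below
    `threshold_n` give 0.0 (no stimulation) so that sensor noise is not felt;
    forces at or above `max_force_n` saturate at `max_hz`; in between, the
    rate rises linearly from `min_hz` to `max_hz`.
    """
    if not 0.0 <= threshold_n < max_force_n:
        raise ValueError("need 0 <= threshold_n < max_force_n")
    if force_n < threshold_n:
        return 0.0
    fraction = (min(force_n, max_force_n) - threshold_n) / (max_force_n - threshold_n)
    return min_hz + fraction * (max_hz - min_hz)


for reading in (0.1, 2.0, 5.0, 20.0):
    print(reading, "N ->", round(force_to_stim_hz(reading), 1), "Hz")
```

The threshold and saturation keep the mapping bounded at both ends, so light sensor noise goes unnoticed and very hard grips cannot drive stimulation outside the chosen range.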

Enhanced Dexterity and Precision

Neural interfaces and AI could transform the dexterity and precision of prosthetic hands. By decoding intricate neural signals and adapting to user preferences, these systems may enable finer control than current devices allow, making delicate tasks such as writing or assembling small parts possible. Dexterity at this level, so far out of reach for most prosthetic users, would substantially improve quality of life for people with limb loss.

Ethical Considerations and Accessibility

While the integration of neural interfaces and AI offers tremendous potential for improving prosthetic hand functionality, the ethical questions surrounding this technology must also be addressed. Data privacy, accessibility, and potential bias in AI algorithms all require careful attention. Ensuring equitable access to these technologies for all users is essential if this innovation is to have its full positive impact.